
Optical flow estimation is central to several computer vision tasks, and it requires accurate spatial and temporal gradient information.

However, if there are fast-moving objects in the scene or if the camera moves rapidly, then the acquired images will suffer from motion blur, which will lead to poor optical flow estimation.

Such challenging cases can be handled by event sensors, a novel generation of sensors that record pixel-level brightness changes as binary events at very high temporal resolution.

The brightness constancy constraint, which is the basis of several optical flow algorithms, cannot be applied directly to event sensor data, which makes optical flow estimation challenging.
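
For reference, the brightness constancy constraint in its standard textbook form is given below. The notation is ours and is not taken from this work: $I(x, y, t)$ is image intensity, $(u, v)$ is the flow at a pixel, and $I_x, I_y, I_t$ are partial derivatives of $I$. The constraint assumes that a point keeps its intensity as it moves, and a first-order Taylor expansion gives the familiar linear form:

$$
I(x + u\,\Delta t,\; y + v\,\Delta t,\; t + \Delta t) = I(x, y, t)
\quad\Longrightarrow\quad
I_x\,u + I_y\,v + I_t = 0
$$

Both forms need the intensity $I$ and its spatial gradients, whereas an event stream reports only per-pixel brightness changes, which is why the constraint does not transfer directly.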

We overcome this challenge by imposing the brightness constancy constraint on intensity images predicted from event sensor data.
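
As a minimal sketch of what imposing brightness constancy on predicted images can look like, the snippet below warps a second intensity image back to the first with a candidate flow and measures the remaining intensity difference. It is an illustration under our own assumptions, not the loss used in this work: the function names `bilinear_sample` and `photometric_loss`, the L1 penalty, and the border clamping are all hypothetical choices, and plain Python lists are used only to keep the example dependency-free.

```python
def bilinear_sample(img, x, y):
    """Sample img (a list of rows) at real-valued (x, y), clamping to the border."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
    bot = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
    return (1 - ay) * top + ay * bot


def photometric_loss(img0, img1, flow):
    """Mean absolute brightness-constancy residual (assumed L1 penalty).

    flow[y][x] = (u, v) is the displacement of pixel (x, y) from img0 to img1.
    """
    h, w = len(img0), len(img0[0])
    total = 0.0
    for y in range(h):
        for x in range(w):
            u, v = flow[y][x]
            warped = bilinear_sample(img1, x + u, y + v)
            total += abs(warped - img0[y][x])
    return total / (h * w)


# Toy data: a single bright pixel that moves one pixel to the right.
img0 = [[0, 0, 0, 0, 0], [0, 5, 0, 0, 0], [0, 0, 0, 0, 0]]
img1 = [[0, 0, 0, 0, 0], [0, 0, 5, 0, 0], [0, 0, 0, 0, 0]]
true_flow = [[(1, 0)] * 5 for _ in range(3)]
zero_flow = [[(0, 0)] * 5 for _ in range(3)]

loss_true = photometric_loss(img0, img1, true_flow)
loss_zero = photometric_loss(img0, img1, zero_flow)
```

On this toy pair the residual vanishes for the correct flow and is positive for zero flow, so minimizing it pushes the flow estimate toward the true motion without any ground truth flow.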

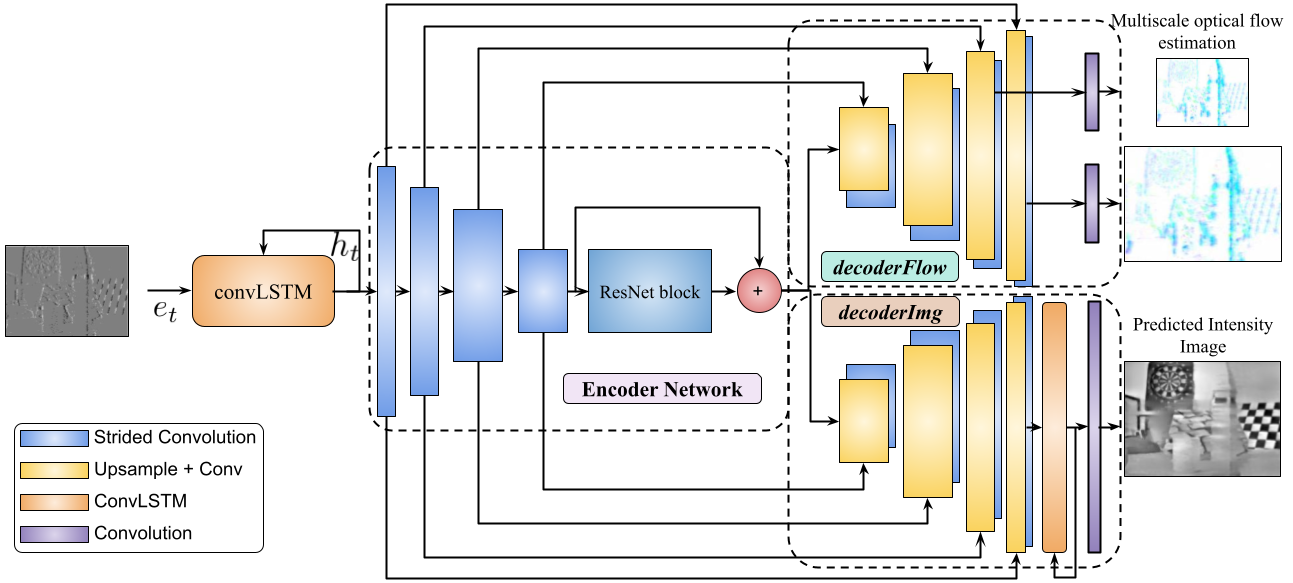

For this task, we design a recurrent neural network that jointly predicts a sparse optical flow and intensity images from the event data.

While intensity estimation is supervised using ground truth frames, optical flow estimation is self-supervised using the predicted intensity frames.

However, in our case the temporal resolution of the ground truth intensity frames is far lower than that of the predicted intensity frames, which makes supervision challenging.

Because we use a recurrent neural network, this challenge can be overcome by sharing the weights across all of the predicted intensity frames.
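
The idea of supervising only some time steps while sharing weights across all of them can be sketched with a toy example. Everything here is an assumption made for illustration and is not the architecture of this work: the scalar cell, the names `run_shared_cell` and `sparse_intensity_loss`, and the squared-error loss are hypothetical, and a single number stands in for an intensity frame.

```python
def run_shared_cell(events, weights, h0=0.0):
    """Unroll one toy recurrent cell over all event bins with the SAME weights."""
    w_h, w_x = weights
    h, preds = h0, []
    for x in events:
        h = w_h * h + w_x * x  # identical (w_h, w_x) at every step
        preds.append(h)
    return preds


def sparse_intensity_loss(preds, ground_truth):
    """Mean squared error over only the steps that have a ground truth frame.

    ground_truth maps step index -> target value; all other steps are unsupervised.
    """
    errs = [(preds[t] - target) ** 2 for t, target in ground_truth.items()]
    return sum(errs) / len(errs)


# Six event bins, but ground truth exists only at steps 2 and 5.
events = [1.0, 0.0, -1.0, 1.0, 0.0, 1.0]
ground_truth = {2: 0.0, 5: 2.0}

preds_good = run_shared_cell(events, weights=(1.0, 1.0))
preds_bad = run_shared_cell(events, weights=(0.5, 1.0))

loss_good = sparse_intensity_loss(preds_good, ground_truth)
loss_bad = sparse_intensity_loss(preds_bad, ground_truth)
```

Because the same weights produce every prediction, the error measured at the two supervised steps also constrains the predictions at the four unsupervised steps in between.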

Quantitatively, our predicted optical flow is better than that of previously proposed algorithms for optical flow estimation from event sensors.

We also show our algorithm’s robustness against challenging cases of fast motion and high dynamic range scenes.
